- Published on ·

- Time to read

- 29 minute read

AI Coding Agent Showdown: 10 Top Tools Compared

- Authors

- Name

- Patrick Hulce

- @patrickhulce

tl;dr - I built the same SaaS product 10 times with 10 different coding agents to find the best of the best.

- OpenAI Codex Review

- Claude Code Review

- Gemini CLI Review

- GitHub Copilot Review

- Devin Review

- Cursor Review

- Cline Review

- Aider Review

- v0 Review

- GitHub Spark Review

I just got back from paternity leave, and let me tell you, I was sweatin' bullets about what I'd be coming back to.

I don't think there's been another six-month period in my lifetime where the practice of software has changed so radically. While I was knee-deep in diapers and Disney+, apparently everyone and their mama moved from AI autocomplete to AI agents writing entire PRs for them. The Fear Of Losing My Awesome Career (FOLMAC?) was real y'all.

So naturally, I did what any coding lover would do and spared no expense testing TEN different AI coding agents to see if I should start updating my resume or if we still have a few years left before the robots come for us all. Now, I know there are plenty of leaderboards and benchmarks out there that test these things on obscure algorithms in twenty different languages with multiple attempts and all that jazz, which you're free to stop reading here and look at those instead if you like (leaderboard, leaderboard, leaderboard). In the true spirit of vibe coding though, I'm basing these reviews on something much more practical: vibes.

I tried to build the exact same boring SaaS product ten times, once with each tool. It has all the usual suspects: some auth, a Postgres DB, a CRUD REST API, even a marketing landing page or two. You know, the stuff so many of us actually get paid to build instead of leetcode puzzles 😅.

Let's see which of these silicon overlords deserves our allegiance (and perhaps more importantly, our credit card numbers).

The Setup

For this grand experiment, we're building Hulkastorus, a fictional Google Drive competitor that absolutely nobody asked for. Hulkastorus is complex enough to be interesting but boring enough to be realistic.

To make the comparison fair across tools, I established a few ground rules:

Identical starting point. Each agent received a Git repository pre‑populated with the same Next.js 15 starter, including shadcn for UI components, Tailwind for styling, Jest for unit tests, and Playwright for end‑to‑end tests. I originally planned to ask them to bootstrap the project from scratch but that failed so epically I had to revert because, well, as we’ll see, that turned out to be a fool’s errand. I wanted the focus to be on implementing features.

High-quality, identical context. I developed a theory while reading about my impending obsolescence on Hacker News all summer: these coding agents, much like the junior engineers they're compared to, would be far more effective if we give them the same information I'd be giving a real human at a real job. You know, actual product requirements and technical design documents instead of just "make button go brrr." Accordingly, I wrote two comprehensive documents:

PRODUCT.md, which contained user stories, acceptance criteria, and a feature list, andSPEC.md, which laid out in detail the recommended tech stack, database schema, architectural patterns, API routes, pages, components, and even wireframes of each product surface. I also authoredTASKLIST.md, a breakdown of forty‑five tasks that roughly correspond to JIRA tickets you might see in a real project. These ranged from "Implement user login with JWTs" to "Add file upload modal," nice, bite-sized pieces for an agent to chew on without churning too long.Minimal prompting and intervention. I interacted with each assistant using its native interface: CLI, IDE plugin, or web UI. I issued a high‑level instruction from

TASKLIST.mdor something brief and direct like “fix the failing unit tests.” I tried to avoid micromanaging as much as possible. Afterall, I'm judging them on how much I have to micromanage them!Timebox. For each tool I invested about half a day of wall‑clock time, depending on how quickly it progressed. I ended the session when the agent was clearly stuck, or when it had completed enough tasks to form an opinion.

Cost tracking. I paid for each tool’s paid tier where necessary. I recorded the approximate costs necessary to build Hulkastorus in its entirety with each (even if I didn't make it that far), including subscription fees and pay‑per‑use compute. This is not a scientifically precise economic analysis, but it’s enough to give a sense of relative expense.

With the rules of competition in place, let's meet our participants.

The Contenders

We'll start with the archetypes. These tools basically come in three flavors ranging from "oNlY lOsErS uSe A GuI--ViM 4eVeR!" to "your grandma could score a Series A":

- Terminal Agents: command-line-based tools for the hardcore developer who lives in Vim.

- IDE / IDE Extensions: agents that integrate directly into an IDE (usually VS Code or a VS Code fork).

- Web UIs: browser-based tools that often focus on generating entire projects from a natural language product sentence.

Many of these actually offer multiple flavors as well, lest they lose out on absorbing a single developer to the competition! It was tough to narrow down the ever-growing field of available tools to just a handful that I would actually use myself, so I tried to grab a variety of styles and prioritize the ones I had heard mentioned the most in various conversations. I wound up with the following list:

Let's get coding.

OpenAI Codex

- Cost to Build: $20 (Plus plan)

- Rating: ★★☆☆☆

- Documentation / Codebase

Codex is OpenAI's coding agent available as both a web UI with a cloud-based sandbox and as a CLI. For access, you can reuse your ChatGPT subscription or use an API key for pay-as-you-go.

Experience

Maybe my expectations were too high from all the GPT-5 hype, but my journey with Codex was...rocky. The cloud version immediately face planted on step one because the sandbox environment didn't allow access to shadcn's domain for npx shadcn add button. Even once I added to the whitelist, it couldn't even open a PR at all because it interpreted one of the diff'd files as an application/octet-stream and surrendered immediately. Welp, I guess that's the end of that test.

The local experience wasn't that much better. Codex CLI is extremely verbose. I appreciate them trying to find some balance between the magic and transparency but viewing all reasoning tokens by default probably ain't it. The commit messages it generated also had escaped newlines (\n) instead of actual line breaks, making the git history look like a ransom note.

Then came the security horrors. It proposed storing user passwords in plaintext. 😱 When I pointed this out, it tried to create its own crypto library from scratch rather than just pulling in bcrypt or heck even something in node's crypto library.

Then, when I told it to fix the failing E2E tests, it just turned them off instead. That's not fixing, that's giving up! And don't even get me started on its attempt to work with Prisma.

// Actual code it produced, I can't make this up.

export type PrismaLike = {

user: {

create: (args: Record<string, unknown>) => Promise<Record<string, unknown>>

delete: (args: Record<string, unknown>) => Promise<unknown>

}

}

let PrismaClientCtor: { new (): PrismaLike }

try {

const mod = (await Function('m', 'return import(m)')('@prisma/client')) as unknown as {

PrismaClient: { new (): PrismaLike }

}

PrismaClientCtor = mod.PrismaClient

} catch {

throw new Error('Prisma client not available at runtime. Ensure @prisma/client is installed.')

}

The most amusing shit-show was when I asked it to fix the end-to-end failing tests. Instead of just, you know, using the AGENTS.md description of the proper call or looking at the package.json file, it would try a shotgun blast of every conceivable test runner command it could think of.

# Actual tool call it ran.

echo '--- try common test commands ---' && \

(npm run test:e2e || \

pnpm test:e2e || \

make test-e2e || \

npm run e2e || \

pnpm e2e || \

make e2e || \

echo 'No common test command worked')

When the tests contained failing output, it finally concluded that the tests were broken and just removed npm run test:e2e from the package.json file 😂.

Bless your heart, Codex, you tried. GPT-5, savior you are not.

Claude Code

- Cost to Build: $100 (Max plan)

- Rating: ★★★★★

- Documentation / Codebase

Claude Code is Anthropic's CLI-based agent. It's quite slick, with helpful completions, fancy animations, and funny phrases while "thinking". The initial experience on the code side was incredibly impressive as well.

Experience

This agent started out like a house on fire. Getting actual tasks done was a breeze. I'd ask for some placeholder content, and it would generate something that was shockingly close to the final design. When I asked it to set up Prisma, it not only configured the schema but also added the client import and a small test to verify the connection. It correctly chose to install bcrypt for password hashing. It felt... intelligent.

But that intelligence was occasionally a double-edged sword. Claude had a tendency to go a little too far, creating overly specific assertions in its tests that it then wasted millions of tokens trying to fix later. Claude also opted for a complex database-backed session management system for auth instead of simple JWTs (which is actually what I asked it for in SPEC.md), which it then struggled to properly manage later on.

The biggest issue, and a theme we'll see again, became evident as time went on. The model was stellar at first, but as the codebase grew, its mistakes compounded. By the end of the day, it got stuck for a full five hours trying to fix its own unit and integration tests. It would declare victory; I'd run the tests with failures I'd point out; it'd go "You're absolutely right"; run for another hour or so to fix them; declare victory again; and repeat the cycle. Poor chump, seven times it thought it had fixed the problem before I finally had to put it out of its misery.

Test misery aside, I was still very impressed and could see myself using Claude Code for a lot of my work on unfamiliar codebases, with the right guidance and intervention of course.

Gemini CLI

- Cost to Build: Free (for now)

- Rating: ★★☆☆☆

- Documentation / Codebase

Google's entry into the CLI agent space feels very much like a clone of Claude Code, right down to the fun and silly phrases it uses while "thinking." It auths with your personal Google account and has a generous free tier.

Experience

The clean loading messages were really nice, but the pleasantries ended there. On its second step, I immediately hit quota warnings with failed requests. The free tier for the 2.5-pro model seems to be... low.

Once we got stuck with the flash model, Gemini never really had a chance. It struggled to create basic Next.js pages that used the request object, a fundamental part of the framework and had a tendency to go completely off the rails. At one point, instead of fixing a few lint errors, it just removed the eslint command from the npm test script.

The real face-palm moment came when it encountered a "module not found" error because it had hallucinated an extra src/src/ in a single file's import path. Instead of fixing that one line, it decided the correct course of action was to rewrite every single import across the entire codebase to remove the @/ path alias. An absolute disaster.

Its final act of desperation was trying to fix a type error in a DELETE API route. After failing to understand the Next.js route segment config even when I gave it the documentation, it just... deleted the file.

│ Type error: Route "src/app/api/v1/users/[id]/route.ts" has an invalid "DELETE" export

│

╭───────────────────────────────────────────────────────────────────╮

│ ✔ Shell rm src/app/api/v1/users/[id]/route.ts (Remove the ... │

╰───────────────────────────────────────────────────────────────────╯

I... I have no words. Sorry, Gemini, but I've seen enough; you're done.

GitHub Copilot

- Cost: $10/mo (Pro plan)

- Rating: ★★★☆☆

- Documentation / Codebase

Copilot is Microsoft/GitHub's flagship coding assistant that you've probably been familiar with for some time offering code completion and basic chat. They've preserved that name while significantly stepping up its capabilities with agentic coding in VS Code, a cloud coding agent, a CLI, and a PR code reviewer on GitHub. Copilot offers rate-limited frontier models from many providers but good luck finding out what those limits really are.

Experience

Copilot is the Honda Civic of AI coding agents. It's not flashy, but it's affordable and gets the job done. The VS Code plugin experience is solid with ample opportunity for rules and customization.

It respects .github/copilot-instructions.md files, directory-specific instructions, web-modified instructions, and situation-specific rulesets in the .github/instructions folder of the repo. But the agent implementation is clearly not on par with our other contenders so far. Copilot failed to pull in the right context as often, doesn't test or lint before committing as often, and made absolutely the laziest commit messages of the bunch. All of these are fixable with better instructions of course, but the point of this test in many ways is how much of the software prompting are you saddling me with! It's nice when the tools just do the sensible thing without being asked, you know?

I also expected the whole "we own the entire IDE" thing to offer a significant advantage to Copilot, but instead it actually made things worse. For example, I asked Copilot to "fix the failing tests" and it happily reported that all tests were passing, but that's just because VS Code hadn't properly picked them up and registered them. It's neat that you integrated Copilot to read VS Code's test panel and all, but that doesn't mean you should completely ignore everything that's not integrated into VS Code, c'mon! Seems like someone needs to tone down their self-confidence, at least Claude Code knew enough to run the damn tests to find out which ones I'm talking about.

Devin

- Cost to Build: ~$250 (~$5 per PR)

- Rating: ★★★☆☆

- Documentation / Codebase

Devin is the slick, VC-backed "AI software engineer" (which also happened to recently acquire and then layoff the Windsurf team). It's a hybrid chatbot/IDE in the cloud, and its pricing/rate-limiting is the most straighforward (and expensive) of the bunch. You pay per "ACU" (Agent Compute Unit), and it's very much not subsidized. Just running git status cost me 8 freaking cents!

Experience

I must say though that the product experience is quite fantastic. They really want to make sure you follow best practices before getting to run any prompts. First, Devin walks you through setting up your repo, configuring test commands, and defining the project scope. Then, and only then, can you assign it a task to complete. When it completes a task, it opens a pull request on GitHub. If your CI checks fail on that PR, it automatically tries to fix the issues and pushes again until tests pass. This is, without a doubt, a glimpse of the future.

The code quality was pretty high, on par with Claude Code, which leads me to believe it's mostly leaning on Claude 4 Sonnet under the hood.

But for all its polish, it still made the same rookie mistake as Codex: happily storing plaintext passwords without a shred of remorse. For a tool that costs this much and markets itself as an autonomous engineer, that's a pretty glaring and unacceptable oversight. It's a great product, but the underlying agent isn't magically better than the others, and the cost is so steep even for simple changes that I can't imagine using it for any work for which I'm footing the bill.

Cursor

- Cost to Build: ~$40 (Pro plan + credits)

- Rating: ★★★★★

- Documentation / Codebase

Cursor is a fork of VS Code that's been supercharged with AI features. It has an agentic IDE extension like Copilot/Cline, an experimental CLI like Claude Code/Codex, AND a web agent mode similar to Devin/Codex. It bundles access to all the top models, though recently went through some controversy over switching from unlimited with generous rate limits to a credit model of pay-as-you-go.

Experience

Before my leave, I had been using Cursor a lot already for its amazing Tab model. I'd messed around with some of the agentic features to mixed success, so I was shocked at how far they've come; this was a standout. The "Auto" model mode, which lets the agent pick the best model for the job, was incredibly fast and effective. In fact, the one time I tried to override auto and force it to take advantage of free GPT-5 tokens, GPT thought for 47 seconds just to decide to run git status. On auto, Cursor didn't get sidetracked or stuck in loops nearly as often as the other agents. The IDE integration is another massive advantage; it can automatically pull in failed test output from the terminal you're already using to inform its next steps.

BUT... et tu, Brute? Even the king is not without sin. After a nearly flawless run on the first few tasks, the trifecta of AI agent failures emerged:

- It stored the user's password unhashed in the database (though unlike the others, did leave a FIXME comment at least 😂).

- When unit tests were failing, it "fixed" it by adding

|| trueto the command, forcing it to pass. - It ran into a type error and decided to overwrite the TypeScript definitions of a library to match the API it wished the library had, instead of just using it correctly.

Despite these somewhat major stumbles, the overall experience was the smoothest and most productive of the bunch. Importantly, Cursor was almost always the quickest to getting back on track once these issues were pointed out. I'll definitely continue to take advantage of Cursor moving forward.

Aider

- Cost to Build: Free (BYO API Key)

- Rating: ★★★☆☆

- Documentation / Codebase

Aider is another CLI tool, but this one is open-source and model-agnostic where you bring your own API key (from OpenAI, Anthropic, Google, OpenRouter, etc). I used it with a free DeepSeek R1 model to see how the open-source world is faring.

Experience

Aider's paradigm is pretty different and a bit jarring coming from the model provider backed options. It explicitly separates discussion/planning from coding It feels less like an agent you delegate to and more like an incredibly powerful typing assistant. In some ways, this was refreshing. It never gets too far ahead of you, and it automatically commits its changes after every single successful task, which is a fantastic way to handle things.

The downside is that these choices result in some pretty limited capabilities, and I really missed how much context the other tools were automatically pulling in for me. Additionally, the DeepSeek model I tried wasn't nearly as capable as the Claude frontier models. DeepSeek hallucinated file paths, failed to set up Playwright's browser dependencies, and has no tool use, which severely limits its ability to interact with the outside world. Since you're also paying-as-you-go, Aider also really highlights just how many tokens these agents burn through. Three small tasks totaling ~200 lines of code change consumed nearly 2 million tokens, thank goodness I wasn't trying it out with Opus 😅

Cline

- Cost to Build: Free (BYO API Key)

- Rating: ★★★★☆

- Documentation / Codebase

Cline is an open-source VS Code extension that brings agentic development patterns into VS Code. Like Aider, you bring your own API keys.

Experience

The overall experience with Cline was excellent and impressive for an open-source option. The documentation is both practical and insightful and was the best of any agent I tested with great advice on how to manage context and work effectively with agentic models in general. The UI is clean, with great transparency on token usage and cost. You can define rules in markdown files (.clinerules) to guide its behavior, and it provides clear settings for auto-approval limits to prevent it from running amok.

I tested Cline connected to OpenRouter using the free DeepSeek R1 model, so it suffered from some of the same subpar coding recommendations as Aider, but that's not Cline's fault. As a product and an interface for working with these models, it's a superior experience to Aider and a fantastic tool.

If you're looking for agentic development on the cheap, Cline+OpenRouter R1 is it IMO!

v0

- Cost to Build: $20

- Rating: ★★★★★ (for early UI), ★☆☆☆☆ (for anything else)

- Documentation / Codebase

v0 is Vercel's generative UI tool. You describe an interface, and it spits out React code using shadcn/ui and Tailwind CSS.

Experience

Vercel's deep connection to the frontend ecosystem shines through with v0. The product and design sense of v0 is by far the highest of the models and bests even a lot of humans I know. So much so, that contrary to every other model, my results were significantly better when I was less specific in my prompts. If I just said "make a file storage dashboard," it produced a beautiful, polished interface. If I tried to give it detailed instructions from my PRODUCT.md, the results got worse.

Yes, everything it produces has that generic "modern Silicon Valley SaaS" aesthetic, but that's not necessarily a bad thing. It will make your app feel polished even if you have the design sense of a drunk skunk.

However, it's absolutely a one-trick pony; iteration attempts for backend functionality and tests were hopeless in their proprietary environment. If you try to go it on your own with their API, you get atrocious results, suggesting there's a lot of proprietary, system prompt-engineering and agent tooling magic happening in their web app. It also mostly ignores any context you try to provide. Copy‑pasting text into the chat simply attaches them as files to your project, which v0 then “summarizes” for the implementing agent, losing critical details. As we covered, this can actually be good news for your apps aesthetic's if you're a bad designer like me 😂 but it also resulted in a lot of mistakes and missed functionality. Overall, it's a phenomenal tool for bootstrapping a UI, but don't expect it to do much else.

GitHub Spark

- Cost to Build: $19/mo (Copilot Business)

- Rating: ★☆☆☆☆

- Documentation

- Code Not Worth Saving

This is GitHub's web-based, v0-like environment for speccing out and generating entire projects.

Experience

This was a major dud. It seems designed for extremely simple projects only. In Hulkastorus's case, it created a single page UI with mediocre design, fake upload spinners, and buttons leading to nowhere. Product and technical requirements were almost completely ignored.

After its initial generation, I made a single request to edit the UI and add a new page. It promptly corrupted its own code with merge conflicts and was unable to recover. I tried to find something nice to say about it, but honestly I can't see a reason to ever use this over v0.

The Side Quests

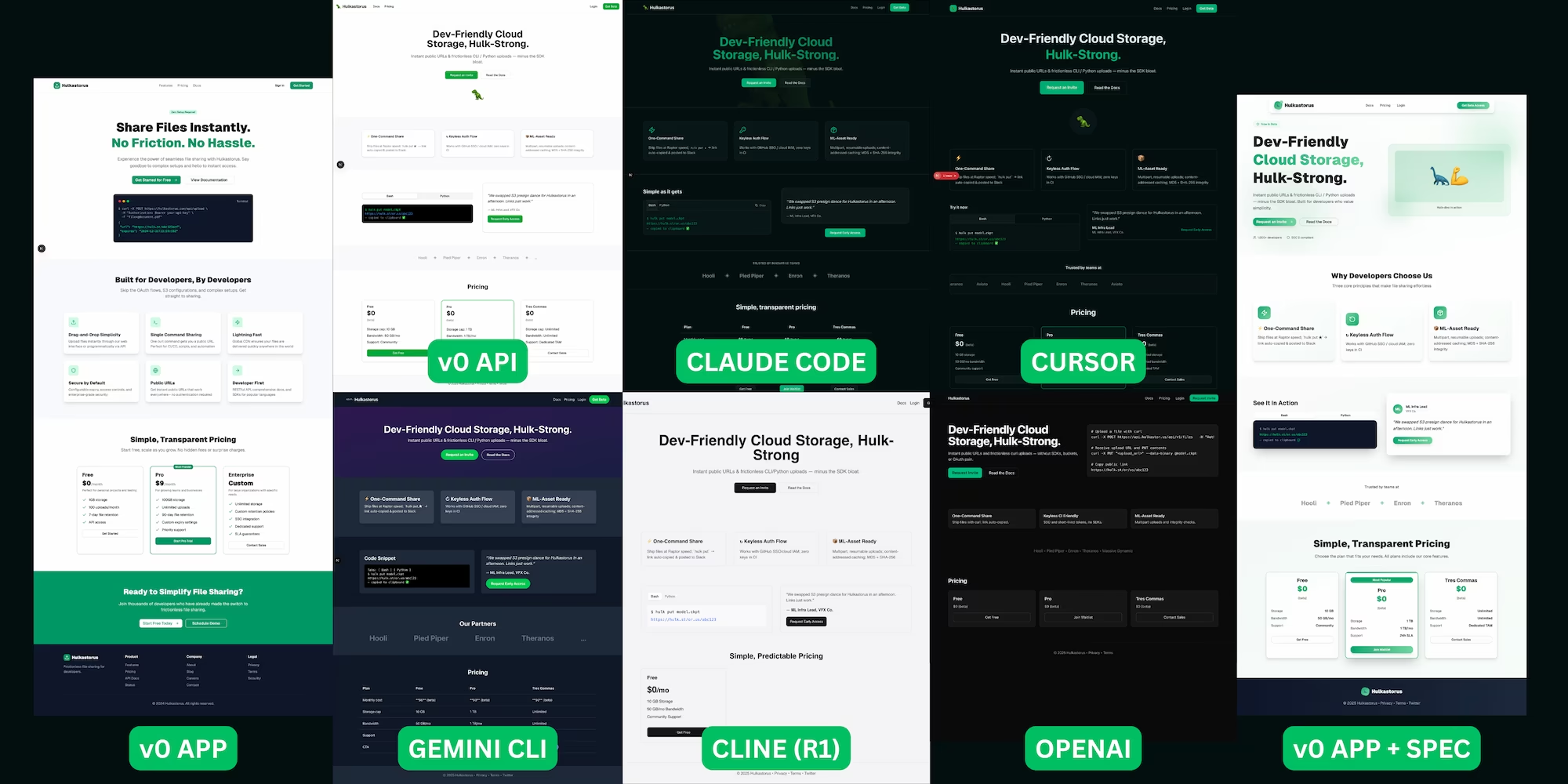

UI Comparison

In my v0 review, I noted how its product and design chops just seemed streets ahead. But you can decide for yourself.

The Hulkastorus homepage as designed by different coding agents and LLMs.

Customization Cheat Sheet

| Agent | Project Configuration | Global Configuration | Supports Conditional Rules | MCP |

|---|---|---|---|---|

| Codex | AGENTS.md | ~/.codex/config.toml | ❌ | ✅ Global |

| Claude | CLAUDE.md | ~/.claude/CLAUDE.md, ~/.claude.json | ½ (Subagents) | ✅ Global, .mcp.json |

| Gemini | GEMINI.md | ~/.gemini/settings.json | ❌ | ✅ Global |

| Devin | UI-driven + Cursor-style rules | UI-driven | ✅ | UI-driven |

| GitHub Spark | UI-driven | ❌ | ❌ | ❌ |

| Aider | .aider.conf.yml | ~/.aider.conf.yml | ❌ | ❌ |

| Cline | .clinerules/*.md | ~/Documents/Cline/Rules/*.md | ½ | ✅ cline_mcp_settings.json |

| Vercel v0 | “Knowledge” tab in UI | ½ | ❌ | ❌ |

| Copilot | .github/instructions/*.instructions.md | UI-driven | ✅ | ✅ UI, .vscode/mcp.json |

| Cursor | .cursor/rules/*.mdc | UI-driven | ✅ | ✅ Global, .cursor/mcp.json |

Token Rates

| Agent | Average Tokens Per PR | Average API Cost Per PR |

|---|---|---|

| Gemini CLI | ~2.4M Tokens | $0.72 (2.5 Flash) |

| Aider | ~600k Tokens | $0.33 (R1) |

| Cline | ~750k Tokens | $0.41 (R1) |

| Devin | 1.87 ACU | $4.21 |

Helpful Resources

- No, AI is not Making Engineers 10× as Productive An AI take grounded in reality that matches many observations here.

- Programming with AI: You’re Probably Doing It Wrong Practical advice on improving AI workflows that's highly actionable for dev teams.

- Context Management Essential for understanding how AI agents can maintain deeper context over sessions.

- Memory Bank Strategy A robust system to persist project state across sessions, useful for long-term AI assistance.

- Building Advanced Software With Agents Examples of instruction systems for how to collaborate with AI more rigorously.

- Subagents (Claude Code) Introduces subagent modularity in Claude Code for scalable, maintainable AI workflows.

The Takeaways

Engineering Best Practices Have Never Been More Important

The product requirements and technical specification documents didn't solve every problem, but they did work wonders for keeping LLMs on track with minimal prompting.

The same exact practices I've preached to junior engineers for years (sensible automated tests, consistency in conventions and development patterns, clear and concise documentation, thorough planning, etc) are the exact same practices that enable LLMs to acquire sufficient context to thrive in a codebase. In fact, as we'll get to in a minute, the less of this you follow, the quicker you're going to find yourself in trouble. Sorry, kids, looks like there's no getting out of testing and docs for you yet! (Though of course the LLMs can help us here too).

Interactive Commands Are Your Worst Nightmare

Most of these tools completely shit the bed when encountering interactive prompts. The ones that try to muscle through (looking at you, Claude Code) still struggle.

This seems inherently solvable though. Prediction: Framework CLIs will soon adopt machine-friendly setup flows. No more interactive prompts, just flags and config files all the way down.

Libraries With Breaking Changes Are Your Second Worst Nightmare

These models mix up APIs constantly, forget which version you're using, and generally have a bad time with anything that isn't stable. This is terrible news for JavaScript 😂 The world of fast fashion frontend frameworks is full of failure fodder for our fickle friends the LLMs.

Prediction: API stability is about to become an OSS kingmaker (the way it probably should have always been). If you change your shit all the time, our new LLM overlords won't be able to use your library effectively. KEEP YOUR APIS STABLE or watch your adoption crater.

LLMs Are Terrible at Project Bootstrapping

There's a reason we have create-next-app and friends. Bootstrapping is a complicated, brittle, and non-creative process! Watching these agents try to initialize a project is painful:

- First, they try the interactive command which fails

- Then, they try to manually install a mismatched set of Next.js / Tailwind / Shadcn versions

- Eventually, they give up, manually place some files, and call it done

- Nothing actually works

To give the LLMs some credit here, this is an impossible ask. Once you've fucked up an init sequence, you're better off nuking everything and starting fresh. The models don't understand this and will spend hours trying to patch their way out of hell because this is what we ask of them.

You Must Babysit Them Constantly

No matter how many times you give them instructions in CAPS, bold, underlines, and italics in your AGENTS.md file, they will still forget. Enforce where you can (agent configuration, git hooks, CI/CD, etc), and watch them like a hawk. I had to tell Claude Code SEVEN times in a row to fix the tests. Six times it thought they were passing. They weren't.

Combinations Are Your Friends

Even though all of them made embarrassing mistakes in isolation, taking the best of each would have been almost flawless. A v0 bootstrap for frontend + Claude Code for backend + an R1 review would be one hell of a combo. Don't feel locked in. With current subsidized rates, use those free tiers to build your own AI coding Voltron on the cheap!

The OOD Drift Problem Comes For Them All

There's a well known problem with autoregressive models like LLMs: error compounding. When a model is trained on expert human data but then uses its own, slightly flawed output as input for the next step, these errors can snowball. A small mistake leads to a bit of a weird state, which leads to an even weirder state the model wasn't trained on, which leads to a catastrophic error.

Here's how I saw this play out over and over again with every single one of these agents:

- Things go really well at first

- LLM makes a bad decision (disables tests, overwrites library typedefs, etc)

- The context of this decision falls out of the window

- Fresh LLM comes in utterly bewildered why the tests are lying to them

- LLM makes increasingly desperate and destructive "fixes"

- Your codebase is now on fire

This is why engineering best practices have never been more important. Step in regularly to keep everything in distribution!

Not a Software Engineer Replacement, Yet

They get stuck in loops. They broadcast passwords in plaintext. They actively sabotage your application to make a bad test pass.

There is definitely still a need for a human-in-the-loop to provide oversight and course-correct even the best of these models when they start drifting into nonsense.

The Winner

And the winner (for me at least) is... Cursor.

This surprised the hell out of me given all the leaderboard stats and discourse on social. Now don't get me wrong, Claude Code was also a monster that I'll probably use actively as well for trickier implementations, but here's why Cursor got the edge:

- Context Magic: Pulls in the right context without being asked more often than the others

- Selective Rules:

.cursor/rulescan target specific files intelligently - IDE Integration: Jump in to fix things yourself as needed, with the agent seamlessly picking up everything you're puttin' down

- Test Horror: This one's probably not fair, but that six hour Claude test fixing session left a really sour taste in my mouth

- Multi-Model: Easy model switching without vendor lock-in, better API rates than Cline

v0 gets a massive honorable mention for its UI generation prowess, and Claude Code is a very, very close second. Like my takeaway suggests, I'll probably be using a combination of these moving forward.

The Losers

The biggest losers in all of this? Lovers of programming 😅

My simmering anxiety from paternity leave has been partially confirmed, but not in the way I expected. I'm not worried about these agents directly taking my job, nor do I think using them makes anyone a "10x engineer" overnight. If you honestly believe these tools make you 10x more productive at the same quality level, I have to assume you're a pretty inexperienced engineer, and the two of us were never really competing for the same roles anyway.

That said, I still dread this new future.

First, the joy is gone. I don't enjoy babysitting a mediocre LLM, but I do very much love coding. I appreciate the art of building a perfect mental model of the problem space and the satisfaction of building something elegant. I don't get that same satisfaction building a project with an LLM agent. If this becomes my job, I'll be very, very sad.

Second, it's a terrible fit for my brand of brain. At worst, it's constant task-switching with zero deep focus. At best, it's just staring at a screen doing lots of waiting for the agent to finish. For someone with ADHD-like behaviors, this is basically my personal hell. No flow state, just an endless stream of "attention here for 2 minutes," "now this agent got stuck over here please," "don't forget to hit continue on this other agent." Kill me now.

Third, and most concerningly, I have to admit it's pretty good. As much as I love to dunk on it for making some really stupid mistakes, most of the code generated by Claude is still, on average, better than the median developer I've met in my career, to make no mention of the breadth of problems that it can tackle. My concern is that these tools will dramatically raise the floor of output for inexperienced "developers" while simultaneously radically reducing the cost required to achieve mediocrity. I have to assume this makes expensive, expert-level services a much less attractive option. I suspect many fewer companies will pay a premium for excellence when you can get low quality but passable for a small, small fraction of the price.

The Silver Lining

If there's one glimmer of hope, it's that my early tests with media ML-focused Python code have performed much worse than these Next.js examples, so maybe I have some time before involuntary retirement. The enterprise world with its legacy systems and domain-specific knowledge will also probably be safe for a little while longer if I need to buy myself a few more years after that.

For now, I'm learning to adopt the best hybrid approach I can to keep my price-to-productivity value prop attractive. Using these tools for tedious refactors while keeping the interesting problems for myself. Letting them write tests and mock data while I design systems. It's a delicate dance.

As has been repeated many times before, the future probably isn't AI replacing programmers entirely. It's AI making mediocre programmers productive enough that experienced programmers become a luxury most companies can't justify. It's not that the last generation will be unemployed necessarily; we'll just be debugging AI-generated code for the rest of our careers. And honestly? That might be worse. But hey, at least they still can't figure out password hashing. So we've got that going for us, which is nice.

Most of all though, I'm going to appreciate the heck out of how fortunate I've been to spend a good chunk of my career getting paid handsomely to do something I genuinely love so much.

Because who knows? Those days might be numbered.

Want to try this experiment yourself? The full PRODUCT.md, SPEC.md, and TASKLIST.md specs are on GitHub. Feel free to use them to evaluate your own favorite coding assistant. Just promise me you'll hash those passwords. Please. For the love of all that is holy, hash the passwords.

Also, if you love or happen to work on any of these tools and want to prove me wrong, my DMs are always open! I'll happily eat crow if your agent can build Hulkastorus to spec the first time. :)